In natural language processing (NLP), generating coherent, contextually relevant text remains an ongoing challenge. Traditional generative models, while impressive, often struggle to produce responses that capture the nuances of human language and context. Retrieval-Augmented Generation (RAG) addresses this by enhancing Large Language Models (LLMs) with external knowledge sources that are integrated into the text generation process.

RAG optimizes the output of large language models by drawing on authoritative knowledge bases beyond their original training data. LLMs, trained on vast volumes of data and built with billions of parameters, excel at tasks like question answering, language translation, and text completion. RAG extends these capabilities to specific domains or an organization's internal knowledge base, all without the need for extensive retraining.

At its core, RAG represents a cost-effective strategy for improving LLM output, ensuring that generated text remains relevant, accurate, and useful across various contexts. By harnessing the synergy between powerful language models and external knowledge sources, RAG opens new avenues for enhancing text generation in NLP and addressing the ever-growing demand for intelligent, context-aware responses.

Understanding Retrieval-Augmented Generation

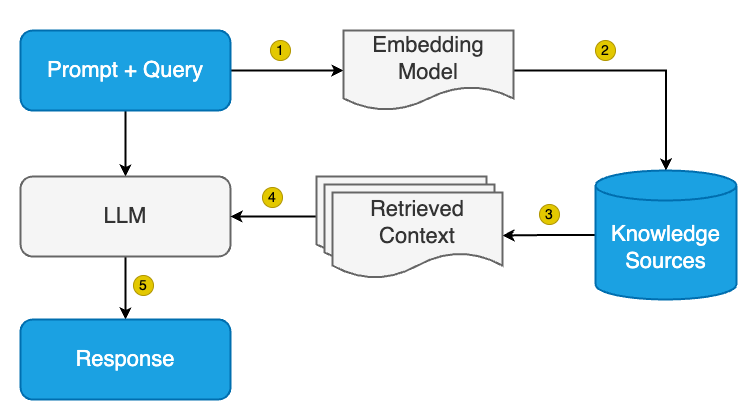

Retrieval-Augmented Generation (RAG) bridges the gap between traditional generative models and external knowledge sources. It is a multi-step process that enhances the capabilities of Large Language Models (LLMs) by integrating relevant information retrieved from external knowledge bases. The process has two components:

- Retrieval Component : In the first phase of RAG, relevant context or passages are retrieved from external knowledge sources based on the input query or context. This retrieval process ensures that the generated responses are informed by authoritative and up-to-date information, beyond what is available in the model's original training data.

  - Techniques such as TF-IDF, BM25, or more advanced neural-based retrievers are commonly employed to retrieve relevant information efficiently.

  - The retrieved passages serve as the foundation for augmenting the generation process, providing additional context and insights to enhance the relevance and accuracy of the generated text.

- Generation Component : Once the relevant context has been retrieved, it is seamlessly integrated into the generation process to produce a coherent and contextually relevant response. The generation component of RAG utilizes the retrieved information to guide the generation of text, ensuring that the output remains aligned with the retrieved context.

  - Large Language Models (LLMs), such as the GPT series, are often used in the generation phase of RAG. These models leverage their vast training data and billions of parameters to generate responses that are fluent and contextually appropriate.

  - By incorporating the retrieved context into the generation process, RAG models produce responses that are not only linguistically accurate but also deeply informed by external knowledge sources.
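To make the retrieval step more concrete, here is a minimal sketch of BM25 scoring in plain Python. It is an illustration under stated assumptions rather than a production retriever: the function names (`tokenize`, `bm25_scores`, `retrieve`), the regex tokenizer, and the parameter defaults `k1=1.5` and `b=0.75` are choices made for this example, and the IDF term uses a commonly used non-negative variant.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase word tokens are good enough for a sketch.
    return re.findall(r"\w+", text.lower())


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs

    # Document frequency: in how many documents each term appears.
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    query_terms = tokenize(query)
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Non-negative IDF variant: log(1 + (N - n + 0.5) / (n + 0.5)).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term frequency saturates with k1; b controls length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def retrieve(query, docs, top_k=2):
    """Return the top_k documents ranked by BM25 score."""
    scores = bm25_scores(query, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [docs[i] for i in ranked[:top_k]]
```

In a real system the corpus statistics would typically be precomputed in an index instead of being recalculated for every query, as this sketch does.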

Understanding the synergy between the retrieval and generation components of RAG is crucial for grasping its transformative potential in natural language processing. By seamlessly integrating external knowledge sources into the text generation process, RAG enables LLMs to produce responses that are not only fluent and coherent but also contextually relevant and accurate.
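The way the two components fit together can be sketched in a few lines. The example below is a simplified illustration, not a reference implementation: the retriever is a simple word-overlap stand-in (a real system would more likely use BM25 or a neural retriever), the prompt wording is an assumption, and `generate` is a hypothetical callable standing in for whatever LLM client is used.

```python
import re
from typing import Callable, List


def overlap_score(query: str, passage: str) -> int:
    # Stand-in relevance score: number of distinct words shared with the query.
    q = set(re.findall(r"\w+", query.lower()))
    p = set(re.findall(r"\w+", passage.lower()))
    return len(q & p)


def retrieve(query: str, passages: List[str], top_k: int = 2) -> List[str]:
    # Retrieval component: keep the top_k highest-scoring passages.
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: List[str]) -> str:
    # Augmentation: place the retrieved passages in front of the question.
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(
    query: str,
    passages: List[str],
    generate: Callable[[str], str],  # hypothetical LLM call: prompt in, text out
    top_k: int = 2,
) -> str:
    # Generation component: the model sees the query plus retrieved context.
    context = retrieve(query, passages, top_k)
    return generate(build_prompt(query, context))
```

Passing `generate=lambda prompt: prompt` returns the assembled prompt unchanged, which is a convenient way to inspect what the model would receive before wiring in a real LLM.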

Applications of RAG

The versatility of Retrieval-Augmented Generation (RAG) extends to a wide array of applications within the realm of natural language processing. By harnessing the power of external knowledge sources to augment text generation, RAG has demonstrated remarkable efficacy across various use cases. Below are some notable applications of RAG:

- Question Answering Systems : RAG has proven to be particularly effective in enhancing question answering systems. By retrieving relevant information from knowledge bases or online sources, RAG models can provide more accurate and informative responses to user queries. Whether it's factual questions, complex inquiries, or domain-specific knowledge, RAG-equipped question answering systems excel at delivering precise and contextually relevant answers.

- Text Summarization : Text summarization is another area where RAG shines. By leveraging external knowledge sources to enrich the summarization process, RAG models can produce more comprehensive and informative summaries. Whether it's condensing lengthy documents, extracting key insights, or generating concise summaries of news articles, RAG-enabled text summarization systems offer valuable tools for information retrieval and consumption.

- Dialogue Systems : In the realm of conversational AI, RAG has emerged as a game-changer for dialogue systems. By incorporating external knowledge sources into the dialogue generation process, RAG-equipped chatbots and virtual assistants can engage in more contextually rich and informative conversations with users. Whether it's answering user queries, providing recommendations, or engaging in domain-specific discussions, RAG-enabled dialogue systems offer more engaging and satisfying user experiences.

- Content Generation : RAG has also found applications in content generation tasks such as content creation, storytelling, and creative writing. By leveraging external knowledge sources to augment the generation process, RAG models can produce more diverse, engaging, and informative content. Whether it's generating product descriptions, crafting narratives, or composing marketing copy, RAG-enabled content generation systems offer valuable tools for content creators and marketers.

- Domain-specific Applications : Beyond general-purpose applications, RAG can be tailored to specific domains or organizational knowledge bases. By fine-tuning the retrieval and generation components to suit the requirements of a particular domain, RAG models can offer specialized solutions for industries such as healthcare, finance, legal, and more. Whether it's assisting professionals with domain-specific queries, providing expert advice, or facilitating decision-making processes, domain-specific RAG applications offer tailored solutions to complex challenges.

Advantages of RAG

Retrieval-Augmented Generation (RAG) offers a multitude of advantages over traditional generative models, making it a compelling approach for various natural language processing tasks. Below are some key advantages of RAG:

- Enhanced Relevance and Accuracy : By leveraging external knowledge sources, RAG models can produce responses that are more relevant and accurate to the given context. The integration of retrieved information ensures that generated text is informed by authoritative sources, leading to more precise and informative outputs.

- Contextual Understanding : RAG models excel at capturing the nuances of context, thanks to the integration of external knowledge sources. By retrieving relevant context or passages, RAG models can generate responses that are more aligned with the surrounding context, leading to more coherent and contextually relevant outputs.

- Improved Informativeness : The incorporation of external knowledge sources enriches the generation process, enabling RAG models to produce more informative responses. Whether it's providing detailed explanations, citing relevant facts, or offering additional insights, RAG-generated text tends to be more informative and comprehensive compared to traditional generative models.

- Domain Adaptability : RAG models can be adapted to specific domains or organizational knowledge bases, making them highly versatile and customizable.

- Cost-effectiveness : RAG updates what the model can draw on by changing the knowledge base rather than the model itself, so it avoids the cost of extensive model retraining.

- Scalability : RAG frameworks are inherently scalable, capable of handling large volumes of data and accommodating diverse knowledge sources.

- Interpretability : The use of external knowledge sources in RAG models can enhance interpretability by providing transparent sources for generated responses.
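The interpretability point can be illustrated with a small sketch that keeps track of which sources fed a response. Everything here is hypothetical and chosen for illustration: the `Passage` and `AttributedAnswer` types, the `attribute` and `format_with_citations` helpers, and the citation layout are assumptions, not part of any particular RAG framework.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Passage:
    source: str  # e.g. a document title or file name (hypothetical field)
    text: str


@dataclass
class AttributedAnswer:
    text: str
    sources: List[str]


def attribute(answer_text: str, used_passages: List[Passage]) -> AttributedAnswer:
    # Record each source once, in the order the passages were retrieved.
    sources: List[str] = []
    for passage in used_passages:
        if passage.source not in sources:
            sources.append(passage.source)
    return AttributedAnswer(text=answer_text, sources=sources)


def format_with_citations(answer: AttributedAnswer) -> str:
    # Append a numbered source list so readers can check the answer.
    refs = "\n".join(f"[{i}] {s}" for i, s in enumerate(answer.sources, start=1))
    return f"{answer.text}\n\nSources:\n{refs}"
```

Note that this only records which passages were supplied to the model. It does not verify that the generated text actually relies on them, which would need a separate check.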

Conclusion

Retrieval-Augmented Generation (RAG) represents a groundbreaking approach to text generation in natural language processing, offering a potent blend of retrieval-based methods and generative models. By seamlessly integrating external knowledge sources into the text generation process, RAG models have demonstrated remarkable capabilities in producing contextually relevant, accurate, and informative responses across a wide range of applications.

By pairing retrieval with generation, RAG paves the way for more intelligent, informative, and contextually aware AI systems that cater to the needs of users across diverse domains and applications.
